RTX tensor/RT cores used in FAH?

Moderator: Site Moderators

Forum rules

Please read the forum rules before posting.


RTX tensor/RT cores used in FAH?

Are the RT or tensor cores used in FAH on RTX cards?

And if not, would enabling some sort of software modification allow the cards to fold faster?


-

foldy

- Posts: 2040

- Joined: Sat Dec 01, 2012 3:43 pm

- Hardware configuration: Folding@Home Client 7.6.13 (1 GPU slots)

Windows 7 64bit

Intel Core i5 2500k@4Ghz

Nvidia gtx 1080ti driver 441

Re: RTX tensor/RT cores used in FAH?

Currently not, because FAH uses single precision and some double precision, while tensor cores use half precision. But if someone finds a way to use these cores too, then maybe.

-

toTOW

- Site Moderator

- Posts: 6539

- Joined: Sun Dec 02, 2007 10:38 am

- Location: Bordeaux, France

- Contact:

Re: RTX tensor/RT cores used in FAH?

They will probably never be used, since they don't work with enough numerical precision...

-

foldy


Re: RTX tensor/RT cores used in FAH?

If RT cores get more powerful in the future and are also adopted by AMD, maybe some mathematical tricks could calculate higher precision using several low-precision operations?
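One such trick is to store each value as an unevaluated sum of two low-precision numbers: a "hi" part plus a "lo" remainder. The minimal sketch below simulates FP16 rounding with Python's `struct` half-precision format code `'e'`; the helper names `to_f16`, `to_f32` and `split_f16` are hypothetical, made up for illustration, and this is not how any FAH core works.

```python
import struct


def to_f16(x: float) -> float:
    """Round a Python float to the nearest FP16 value (returned as a float)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_f32(x: float) -> float:
    """Round a Python float to the nearest FP32 value (returned as a float)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def split_f16(x: float):
    """Hypothetical helper: represent x as hi + lo, both FP16 values."""
    hi = to_f16(x)       # best single FP16 approximation
    lo = to_f16(x - hi)  # FP16 approximation of what hi missed
    return hi, lo


value = to_f32(3.14159265)  # an FP32 value we want to carry around
hi, lo = split_f16(value)
err_single = abs(value - hi)       # error when using one FP16 number
err_pair = abs(value - (hi + lo))  # error when using two FP16 numbers
print(f"hi={hi!r} lo={lo!r}")
print(f"one FP16: {err_single:.3e}   FP16 pair: {err_pair:.3e}")
```

The pair stores the value far more accurately than one FP16 number, but every arithmetic operation on pairs (a product, for example, needs hi*hi + hi*lo + lo*hi) costs several FP16 operations, which is where the slowdown comes from.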

Re: RTX tensor/RT cores used in FAH?

Yes, it's possible to use enough mathematical tricks to avoid double precision if the hardware doesn't support it, but that slows down processing. The same would be true if you were trying to avoid single-precision hardware (if there were actually hardware that didn't support single precision). Choosing the right tool for the job is what consistently benefits science, especially since those tricks would require FAH to exclude assignments to all of the non-RTX hardware that is happily folding today.
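To make the cost concrete, here is a minimal sketch of one such trick: Knuth's error-free TwoSum, used to carry the rounding error of FP32 additions in a second FP32 word (compensated summation). FP32 is simulated with Python's `struct` module, and the function names are hypothetical. A plain addition is 1 FP32 operation; each compensated step below is 7.

```python
import struct
from fractions import Fraction


def to_f32(x: float) -> float:
    """Round a Python float to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def add32(a: float, b: float) -> float:
    # For FP32 inputs, FP64 has enough extra bits that rounding the FP64 sum
    # to FP32 gives the correctly rounded FP32 sum.
    return to_f32(a + b)


def sub32(a: float, b: float) -> float:
    return to_f32(a - b)


def two_sum(a: float, b: float):
    """Knuth's TwoSum: (s, e) with s = fl(a + b) and s + e == a + b exactly."""
    s = add32(a, b)
    b_virtual = sub32(s, a)
    a_virtual = sub32(s, b_virtual)
    b_err = sub32(b, b_virtual)
    a_err = sub32(a, a_virtual)
    return s, add32(a_err, b_err)  # 6 FP32 operations in total


def naive_sum(values):
    total = 0.0
    for v in values:
        total = add32(total, v)  # 1 FP32 operation per value
    return total


def compensated_sum(values):
    total, correction = 0.0, 0.0
    for v in values:
        total, err = two_sum(total, v)
        correction = add32(correction, err)  # 7 FP32 operations per value
    return add32(total, correction)


step = to_f32(0.1)
values = [step] * 10_000
exact = Fraction(step) * 10_000
print("exact:           ", float(exact))
print("naive FP32:      ", naive_sum(values))
print("compensated FP32:", compensated_sum(values))
```

The compensated result is much closer to the exact sum, but it does roughly seven times the work per value, which is the trade-off described above.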

Posting FAH's log:

How to provide enough info to get helpful support.


Re: RTX tensor/RT cores used in FAH?

According to nVidia:

"Turing Tensor cores significantly speed up matrix operations and are used for both deep learning training and inference operations, in addition to new neural graphics functions."

I wonder if it's feasible for FAH to see those RT cores as a separate GPU (with its own thread or WU) assigned to a specific GPU?

"Turing Tensor Cores add new INT8 and INT4 precision"

If FAH recognizes that a card has RT or Tensor cores, perhaps some 'low precision' WUs could be assigned to it, in an effort to maximize GPU efficiency or usage?

I think the majority of the GPUs folding are nVidia's, and it will remain so for a while (seeing that AMD's newest offering, the RX 5700, will be only about as fast as an RTX 2070, but without power optimizations it will fold at a significantly higher power draw).

-

toTOW


Re: RTX tensor/RT cores used in FAH?

INTx means integers... we work with floats... they're fundamentally incompatible (or very slow, if floats are emulated with integers).
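To illustrate why emulation is slow, here is a minimal sketch that multiplies two FP32 numbers using only integer operations on their bit fields (1 sign bit, 8-bit exponent, 23-bit fraction). It is a simplification: it assumes normal, non-zero, finite inputs with no overflow or underflow, and it truncates instead of rounding to nearest. The function names are hypothetical.

```python
import struct


def f32_fields(x: float):
    """Split the FP32 encoding of x into (sign, biased exponent, fraction) integers."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return bits >> 31, (bits >> 23) & 0xFF, bits & 0x7FFFFF


def f32_from_fields(sign: int, exponent: int, fraction: int) -> float:
    """Reassemble an FP32 value from its integer bit fields."""
    bits = (sign << 31) | (exponent << 23) | fraction
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def int_mul_f32(a: float, b: float) -> float:
    """Multiply two normal FP32 values with integer operations only.

    Simplified: truncates instead of rounding; no zero, subnormal,
    infinity, NaN, overflow or underflow handling.
    """
    sign_a, exp_a, frac_a = f32_fields(a)
    sign_b, exp_b, frac_b = f32_fields(b)
    sig_a = frac_a | (1 << 23)  # restore the implicit leading 1 (24-bit significand)
    sig_b = frac_b | (1 << 23)
    product = sig_a * sig_b          # 47 or 48 significant bits
    exponent = exp_a + exp_b - 127   # add exponents, remove one bias
    if product >> 47:                # significand product in [2, 4): renormalize
        product >>= 24
        exponent += 1
    else:                            # significand product in [1, 2)
        product >>= 23
    return f32_from_fields(sign_a ^ sign_b, exponent, product & 0x7FFFFF)


print(int_mul_f32(1.5, 2.5), int_mul_f32(-2.0, 3.0))
```

One hardware FP32 multiply turns into a dozen or so integer operations plus a branch, and a correct version would need even more work for rounding and the special cases left out here.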

-

foldy


Re: RTX tensor/RT cores used in FAH?

Nvidia Tensor Cores can also do mixed-precision floating point (FP16/FP32), which means they multiply 4x4 FP16 matrices and output FP32 results.
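A rough way to see what "FP16 in, FP32 out" means for accuracy is to simulate one 4x4 multiply-accumulate, D = A·B + C: inputs rounded to FP16, products and running sums kept in FP32. This is a minimal sketch under those assumptions; real Tensor Core hardware may order and round the additions differently, and `mma_fp16_fp32` is a hypothetical name.

```python
import struct


def to_f16(x: float) -> float:
    """Round a Python float to the nearest FP16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_f32(x: float) -> float:
    """Round a Python float to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mma_fp16_fp32(A, B, C):
    """Hypothetical simulation of D = A @ B + C on 4x4 matrices.

    A and B are rounded to FP16; the accumulator is FP32. The product of two
    FP16 values (11-bit significands) fits exactly in FP32 (24 bits), so in
    this model only the additions round.
    """
    n = 4
    D = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_f32(C[i][j])
            for k in range(n):
                a = to_f16(A[i][k])
                b = to_f16(B[k][j])
                acc = to_f32(acc + a * b)  # FP32 accumulate
            D[i][j] = acc
    return D


A = [[1.0 / (i + k + 1) for k in range(4)] for i in range(4)]  # Hilbert matrix
I = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
Z = [[0.0] * 4 for _ in range(4)]
D = mma_fp16_fp32(A, I, Z)
worst = max(abs(D[i][j] - A[i][j]) / A[i][j] for i in range(4) for j in range(4))
print(f"worst relative error vs. FP64: {worst:.2e}")
```

With B as the identity, D comes out exactly equal to the FP16-rounded A: the FP32 accumulator cannot recover precision that was already lost when the inputs were rounded to FP16.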

https://www.nvidia.com/en-us/data-center/tensorcore/


-

JimboPalmer

- Posts: 2521

- Joined: Mon Feb 16, 2009 4:12 am

- Location: Greenwood MS USA

Re: RTX tensor/RT cores used in FAH?

Right, if they did FP32 in and FP64 out, they would be useful to the current F@H cores. Unless there are other models Pande Group wishes to pursue, they are not useful for the current math.
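The reason "FP32 in, FP64 out" would work is that an FP32 significand has 24 bits, so the product of two FP32 numbers needs at most 48 bits, which fits in FP64's 53-bit significand (ignoring overflow and underflow). The same argument works one level down: FP16's 11-bit significands give products of at most 22 bits, which fit in FP32. A small check using Python's `fractions.Fraction` for exact arithmetic; the helper names are hypothetical.

```python
import struct
from fractions import Fraction


def to_f32(x: float) -> float:
    """Round a Python float to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def exact_product(a: float, b: float) -> Fraction:
    return Fraction(a) * Fraction(b)  # exact rational product, no rounding


def fits_fp64(a: float, b: float) -> bool:
    """Is the FP32 x FP32 product exactly representable in FP64?"""
    return Fraction(a * b) == exact_product(a, b)  # Python floats are FP64


def fits_fp32(a: float, b: float) -> bool:
    """Is the same product exactly representable when stored back in FP32?"""
    return Fraction(to_f32(a * b)) == exact_product(a, b)


pairs = [(to_f32(0.1), to_f32(0.3)),
         (to_f32(1 / 3), to_f32(2 / 7)),
         (to_f32(12.345), to_f32(-6.789))]
for a, b in pairs:
    print(a, b, "FP64 exact:", fits_fp64(a, b), "FP32 exact:", fits_fp32(a, b))
```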

Tsar of all the Rushers

I tried to remain childlike, all I achieved was childish.

A friend to those who want no friends


Re: RTX tensor/RT cores used in FAH?

Aren't INT calculations more precise than floating point?

I think it would be possible for new projects to include tensor core calculations. Perhaps some projects out there will be helped by it.


-

JimboPalmer


Re: RTX tensor/RT cores used in FAH?

Integers are whole numbers: 2 is always 2, 11 is always 11, and there is no 15.5.

Floating-point numbers are decimals (in binary; I'm not sure what binary decimals are called).

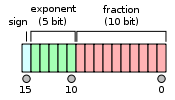

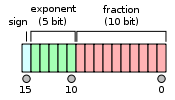

So in FP16, you have 10 bits of accuracy to make 2 = 2.00, 11 = 11.0 and 15.5 = 15.5

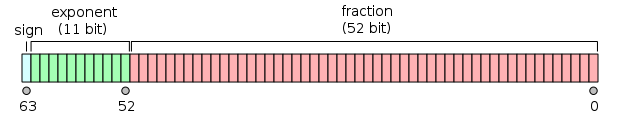

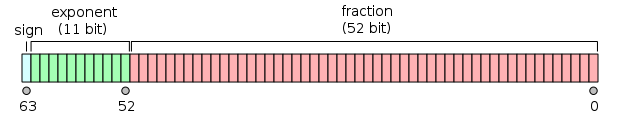

In FP32, besides a much larger exponent, you have 23 bits of accuracy to make 2 = 2.00000, 11 = 11.0000 and 15.50 = 15.5000

I am not sure the exponent is the issue, but when doing repetitive math (and the GPU core does high-speed repeated math for hours), you need a lot of accuracy so that rounding errors do not bite you.
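A small simulation shows how rounding errors "bite" during repeated math: add 0.1 ten thousand times, rounding the running total after every addition to FP16, FP32 or FP64. FP16 and FP32 are simulated with Python's `struct` module; this is an illustration under those assumptions, not FAH code.

```python
import struct


def to_f16(x: float) -> float:
    """Round a Python float to the nearest FP16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_f32(x: float) -> float:
    """Round a Python float to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_f64(x: float) -> float:
    return float(x)  # Python floats are already FP64


def repeated_sum(step: float, count: int, round_to) -> float:
    """Add `step` `count` times, rounding the running total after every addition."""
    step = round_to(step)
    total = 0.0
    for _ in range(count):
        total = round_to(total + step)
    return total


for name, round_to in [("FP16", to_f16), ("FP32", to_f32), ("FP64", to_f64)]:
    print(name, repeated_sum(0.1, 10_000, round_to))
```

The exact answer is about 1000. In FP16 the running total stops growing at 256: from there on, the gap between neighboring FP16 values (0.25) is more than twice the step, so every addition rounds back to the same number. FP32 lands near 1000 but visibly off, and FP64 is off only in distant decimal places.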

FP64 has a larger exponent again but, importantly, much greater accuracy.

In FP64, besides a much larger exponent, you have 52 bits of accuracy to make 2 = 2.000000000000000, 11 = 11.000000000000 and 15.50 = 15.5000000000000
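For reference, the IEEE 754 formats store m fraction bits (10 for FP16, 23 for FP32, 52 for FP64) plus an implicit leading 1, so the relative spacing of numbers near 1 (machine epsilon) and the approximate number of significant decimal digits work out as:

$$
\varepsilon = 2^{-m}:\qquad
\varepsilon_{16} = 2^{-10} \approx 9.8\times10^{-4},\quad
\varepsilon_{32} = 2^{-23} \approx 1.2\times10^{-7},\quad
\varepsilon_{64} = 2^{-52} \approx 2.2\times10^{-16}
$$

$$
\text{decimal digits} \approx (m+1)\log_{10}2:\qquad
11\log_{10}2 \approx 3.3,\quad
24\log_{10}2 \approx 7.2,\quad
53\log_{10}2 \approx 16.0
$$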


Re: RTX tensor/RT cores used in FAH?

Theodore wrote: Aren't INT calculations more precise than floating point?

It depends on how you define "more precise".

While the computer is working in binary, I'm going to talk about things in decimal simply because it's easier to comprehend. The same concepts apply.

Let's suppose we're doing financial calculations and you've decided that money, rather than being counted in dollars, should simply be stored as cents. (Note: there's no coin that represents a fractional cent, so you can always calculate things in cents and then display them in dollars and cents.)

If you have an account balance of $12,345,678.90, that number is easily stored as an exact integer value (1234567890 cents). Now assume that the bank pays you interest of 1% per month. That interest, 12345678.9 cents, would have to be stored either as 0012345678 cents or as 0012345679 cents, since 1% of your 90 cents (0.9 cents) can't be stored as a whole number of cents. Rounding off or truncating that fraction of a cent gives you an exact number of cents, but not necessarily an accurate amount. Eventually, those truncations/roundings accumulate, and for the bank, which has to do that on everybody's account, they can add up to an appreciable amount of money, one way or another.
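The bank example can be played out in a few lines of Python: keep the balance as an integer number of cents, apply 1% monthly interest for a year, and compare truncating and rounding against an exact rational result from `fractions.Fraction`. This is a minimal sketch; the function names are hypothetical, and "round" here means round half up for a non-negative balance.

```python
from fractions import Fraction


def compound_in_cents(balance_cents: int, months: int, rate_percent: int = 1,
                      mode: str = "truncate") -> int:
    """Hypothetical helper: apply monthly interest to a whole-cent balance.

    Assumes a non-negative balance and an integer percentage rate.
    """
    for _ in range(months):
        interest_hundredths = balance_cents * rate_percent  # interest in 1/100 of a cent
        if mode == "truncate":
            interest = interest_hundredths // 100         # drop the fraction of a cent
        else:
            interest = (interest_hundredths + 50) // 100  # round half up
        balance_cents += interest
    return balance_cents


def compound_exact(balance_cents: int, months: int, rate_percent: int = 1) -> Fraction:
    """Same calculation with exact rational arithmetic (fractions of a cent kept)."""
    balance = Fraction(balance_cents)
    for _ in range(months):
        balance += balance * Fraction(rate_percent, 100)
    return balance


start = 1_234_567_890  # $12,345,678.90 stored as cents
exact = compound_exact(start, 12)
truncated = compound_in_cents(start, 12, mode="truncate")
rounded = compound_in_cents(start, 12, mode="round")
print("exact:    ", float(exact))
print("truncated:", truncated, "off by", float(exact - truncated), "cents")
print("rounded:  ", rounded, "off by", float(exact - rounded), "cents")
```

The first month reproduces the figures above: 12,345,678 cents of interest when truncating, 12,345,679 when rounding. Truncation can only lose money, so its error always points the same way; rounding can err in either direction.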

The problem with FP16 is that it only calculates values to 10-bit accuracy, which simply isn't enough when you have hundreds of thousands of atoms in a single protein.

Rounding or truncating of floating-point numbers also occurs, but not in the same way. In my example, I've assumed a unit of 1 cent and a precision that stores 10 digits to the left of the round-off point. If you only have $150.00 in your account, it would still be stored as 0000015000 cents, which isn't a problem for the little guy, but for a business whose balance needs more than 10 digits of storage, there's no way around it within the confines of integer arithmetic and my assumptions. Within the confines of floating point, there's no problem representing either very large or very small numbers to the accuracy of the 23 bits of FP32 or the 52 bits of FP64, since the exponent adjusts itself to accommodate scientific values that can be very large (10^38) or very small (10^-38).
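A quick way to see the floating-point side of this is to measure the gap between neighboring FP32 values at very different magnitudes. The gap grows with the number, but relative to the number it stays between 2^-24 and 2^-23. This sketch simulates FP32 with Python's `struct` module; `f32_spacing` and `MAX_10_DIGIT_CENTS` are hypothetical names, the latter standing for the 10-digit integer from the example.

```python
import struct


def to_f32(x: float) -> float:
    """Round a Python float to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def f32_spacing(x: float) -> float:
    """Gap between positive FP32 value x and the next larger FP32 value."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - x


MAX_10_DIGIT_CENTS = 10**10 - 1  # largest balance the 10-digit integer can hold

amounts = [1e-30, 150.0, 12_345_678.90, 1e30]
for amount in amounts:
    x = to_f32(amount)
    gap = f32_spacing(x)
    print(f"{x:.8g}: gap {gap:.3g}, relative gap {gap / x:.3g}")

business_cents = 25_000_000_000  # a hypothetical $250,000,000.00 balance
print("fits in 10 digits:", business_cents <= MAX_10_DIGIT_CENTS)
```

The relative gap stays near 1e-7 whether the value is 1e-30 or 1e30. The flip side shows up at $12,345,678.90: FP32 stores it as 12345679, with a gap of a whole dollar, so FP32 keeps a fixed number of significant digits rather than a fixed number of cents.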
