PCI-e bandwidth/capacity limitations


PCI-e bandwidth/capacity limitations

I'm in the process of planning a new rig for DC applications (FAH, BOINC, whatever). One issue is that the number of GPUs I wish to purchase will exceed the number of PCI-e slots available on a single board (planned GPU farm, using x1 slots as well as x16).

Something I came across a while back was some sort of PCI-e splitter, if I understand the product correctly (Link to product page). Does anyone have any experience with this hardware, or know whether it would work for our needs in DC projects? Essentially, can this product take a 6-slot motherboard and expand its capacity to 9, 12, 15, 18, 21, or 24 cards by attaching 1, 2, 3, 4, 5, or 6 splitters to the existing slots, respectively? If so, what performance limitations (if any) would there be?
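The slot arithmetic in the question can be written out as a small Python sketch. It assumes each splitter occupies one motherboard slot and exposes four card slots (an assumption inferred from the 9, 12, 15... sequence; the linked product's actual port count isn't stated here), so each splitter adds three cards net. It says nothing about bandwidth, power or driver limits.

```python
def total_cards(board_slots: int, splitters: int, ports_per_splitter: int = 4) -> int:
    """Cards supported when `splitters` board slots each host a 1-to-N splitter.

    ports_per_splitter=4 is an assumption. Each splitter uses up one board slot,
    so it adds (ports_per_splitter - 1) cards net.
    """
    if not 0 <= splitters <= board_slots:
        raise ValueError("splitters must be between 0 and the number of board slots")
    return board_slots + splitters * (ports_per_splitter - 1)


for n in range(7):
    print(f"{n} splitter(s): {total_cards(6, n)} cards")
```

With six slots, 24 cards requires a splitter in every slot, and each card then shares its splitter's single upstream slot with three others.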

I find this interesting mostly because it would simplify system management. One rig, instead of 2 or 3. Plus, with only one rig, there's no need for an additional motherboard, CPU, RAM or drives, also reducing power consumption.

Interested in your thoughts.


Last edited by hiigaran on Fri Jun 17, 2016 4:40 pm, edited 1 time in total.

Re: PCI-e splitter?

I have no experience with that product, but the performance issue will depend on what GPUs you plan to use. Most GPUs from previous generations are more limited by GPU processing than by PCIe transfers. More recent GPUs have enough processing capability for them to be somewhat limited by data transfer speed.

I have used a 1x to 16x cable converter (with no splitter) and have had trouble getting GPUs to work at 1x speed. I don't know if it's possible; I need some time to experiment. I can't speak for BOINC/whatever, but I don't remember any GPUs supported by FAH that are designed for a slot narrower than 16x, so you'll probably need a 16x to N*16x splitter.

If you do proceed with this plan, be sure to let us know what you learn.


Posting FAH's log:

How to provide enough info to get helpful support.


Re: PCI-e splitter?

To my understanding, the only time PCI-e bandwidth is used is during the initial stage of each WU, when it is assigned to the GPU. Using x1 slots or x1 bandwidth has been common since at least 2009 (the oldest Google result I found), and I know a couple of people who successfully run GPU folding farms over x1 on modern cards without any performance difference.

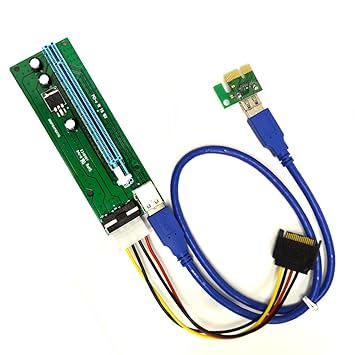

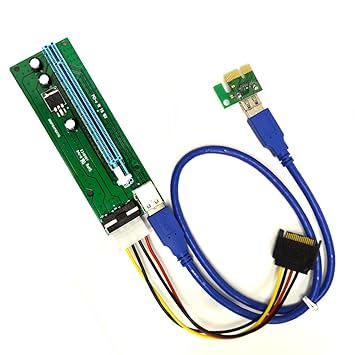

In your particular case, what kind of converter are you talking about? Was it using a ribbon cable, by any chance, like the kind old IDE cables used? The issue with those is that they need to be shielded. The risers used in GPU farms, like the ones used by the people I mentioned and by others who run mining farms, are typically similar converters that are x1 at the motherboard end and x16 at the GPU end. Instead of a ribbon cable, they use a USB 3.0 cable to carry the x1 link between the two ends. These are typically just called USB flexible risers.

So while I know that these work, the issue is finding a cost-effective way to increase motherboard PCI-e capacity without dropping $10K on a backplane and host board or running multiple systems, which is why the prospect of a PCI-e splitter seems rather enticing.


-

Joe_H

- Site Admin

- Posts: 8329

- Joined: Tue Apr 21, 2009 4:41 pm

- Hardware configuration: Mac Studio M1 Max 32 GB smp6

Mac Hack i7-7700K 48 GB smp4

- Location: W. MA

Re: PCI-e splitter?

hiigaran wrote: To my understanding, the only time PCI-e bandwidth is used is during the initial stage of each WU, when they are assigned to the GPU

You are misunderstanding how the GPU is used during folding. The folding core running on the CPU prepares blocks of data to be processed on the GPU, receives them back from the GPU, and sends new blocks for processing almost continuously over the PCIe bus. How frequently the data is transferred depends on how fast the GPU processes each batch. The bandwidth is used all of the time, not just during the initial stage. A fast GPU like a GTX 980 uses more bandwidth than a slower one such as a GTX 750 Ti.
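The point that transfers happen continuously and scale with GPU speed can be illustrated with a deliberately simple model in which each batch must be copied before the GPU can work on it. The batch size and per-batch compute times below are made-up numbers, not FAH measurements, and real GPU runtimes can overlap copies with compute, so this is a pessimistic sketch rather than a prediction.

```python
# Approximate PCIe 3.0 one-direction bandwidth (MB/s) after encoding overhead.
X16_MB_S = 16 * 984.6
X1_MB_S = 984.6


def step_seconds(compute_s: float, batch_mb: float, link_mb_s: float) -> float:
    """Time for one batch if the GPU waits for its data (no copy/compute overlap)."""
    return compute_s + batch_mb / link_mb_s


def x1_slowdown(compute_s: float, batch_mb: float) -> float:
    """How much longer each batch takes on x1 than on x16 under this simple model."""
    return step_seconds(compute_s, batch_mb, X1_MB_S) / step_seconds(compute_s, batch_mb, X16_MB_S)


# Hypothetical workload: 10 MB per batch, a fast GPU (20 ms/batch) vs a slow one (60 ms/batch).
for name, compute_s in [("fast GPU", 0.020), ("slow GPU", 0.060)]:
    print(f"{name}: x1 takes {x1_slowdown(compute_s, 10.0):.2f}x as long per batch as x16")
```

With these hypothetical numbers the fast GPU takes roughly 1.46x as long per batch on x1 and the slow one roughly 1.16x: the same bytes over the same link cost proportionally more when the GPU finishes its work sooner.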

Re: PCI-e splitter?

Is this the way F@H is programmed, or how all GPU processing works? I'm curious because GPU mining hardware recommendations, for instance, skimp almost entirely on CPU performance. And while I'm sidetracking here, why do some cards require a CPU core per card while others don't?

Anyway, if this limitation is a significant factor, what would be the fastest cards to use in x1 mode?


-

Nathan_P

- Posts: 1165

- Joined: Wed Apr 01, 2009 9:22 pm

- Hardware configuration: Asus Z8NA D6C, 2 x5670@3.2 Ghz, 12gb Ram, GTX 980ti, AX650 PSU, win 10 (daily use)

Asus Z87 WS, Xeon E3-1230L v3, 8gb ram, KFA GTX 1080, EVGA 750ti , AX760 PSU, Mint 18.2 OS

Not currently folding

Asus Z9PE- D8 WS, 2 E5-2665@2.3 Ghz, 16Gb 1.35v Ram, Ubuntu (Fold only)

Asus Z9PA, 2 Ivy 12 core, 16gb Ram, H folding appliance (fold only)

- Location: Jersey, Channel islands

Re: PCI-e splitter?

x1 folding does suffer a performance penalty. IIRC, going from x16 to x4 costs around 20%; I've not seen any numbers for x1 recently, but I would estimate another 5% drop.

You can get boards with 6 or 7 x16 slots, but they are high-end workstation boards, and even then you will have the following headaches.

1. Even a pair of Xeons only has 80 PCIe lanes - this would give you 4 or 5 at x16 and the rest at x8 - not an issue at PCIe 3 speeds YET

2. The cards would have to be single slot unless you used risers - this means water cooling the cards.

3. You are going to need at least a pair of PSUs to power that many GPUs.

4. I'm not sure how many GPUs the client and F@H Control can reliably run.

5. Veteran folders will tell you that more than 3 GPUs on a single board causes headaches with stability and heat.
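The first point above is lane arithmetic. A rough sketch, assuming every slot runs at either x16 or x8 and all 80 CPU lanes are free for GPUs (in practice the board's wiring fixes the slot widths, and some lanes go to other devices):

```python
def split_lanes(total_lanes: int, cards: int) -> tuple[int, int]:
    """Return (cards_at_x16, cards_at_x8), putting as many cards at x16 as the lanes allow."""
    if cards * 8 > total_lanes:
        raise ValueError("not enough lanes to run every card at x8")
    # Start with every card at x8; each upgrade to x16 costs 8 more lanes.
    x16 = min(cards, (total_lanes - 8 * cards) // 8)
    return x16, cards - x16


for cards in (5, 6, 7):
    x16, x8 = split_lanes(80, cards)
    print(f"{cards} cards on 80 lanes: {x16} at x16, {x8} at x8")
```

`split_lanes(80, 6)` gives 4 cards at x16 and 2 at x8, and `split_lanes(80, 5)` gives all 5 at x16, matching the "4 or 5 at x16" estimate; a seventh card pushes it down to 3 at x16.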

It's an ambitious goal, and I'm not sure you will get what you are looking for in terms of simplicity, cost or power consumption.


-

Joe_H


Re: PCI-e splitter?

As I understand it, yes, this is how most GPU processing works. The amount of data and commands that need to be sent to the GPU varies with the type of work being done. It does not take a lot of CPU power to prepare the data and handle the I/O between the CPU, RAM and the GPU, which is why some build recommendations are light on CPU performance. That said, with several GPUs and large amounts of data, going too low-end on the CPU may slow the overall throughput of the GPUs.

As for why some cards require dedicated CPU cores and others don't, in the case of folding it comes down to how the drivers were coded to handle OpenCL work processed on the GPU. With the AMD drivers, the CPU appears to be needed only at the actual point of moving data to and from the GPU. With the nVidia drivers, the CPU sits in a constant wait loop looking for those data-transfer commands, hence the need for a dedicated core.

There have been some reports that some of the larger projects benefit from a dedicated CPU core when run on AMD GPUs, and similar reports that the faster nVidia GPUs get a small performance increase with 2 CPU cores free on these large projects.
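The difference described above between the two driver behaviors is the general difference between polling and blocking. The Python sketch below is only a generic illustration of that idea, not how either vendor's OpenCL driver is actually written: the spinning waiter keeps a CPU core busy until the (simulated) GPU finishes, while the blocking waiter sleeps until it is signalled. `fake_gpu_kernel` is a hypothetical stand-in for a GPU batch.

```python
import threading
import time


def spin_wait(done: threading.Event) -> int:
    """Busy-poll the flag; burns a CPU core the whole time. Returns the number of polls."""
    polls = 0
    while not done.is_set():
        polls += 1
    return polls


def blocking_wait(done: threading.Event) -> bool:
    """Sleep in the OS until signalled; uses almost no CPU while waiting."""
    return done.wait(timeout=5.0)


def fake_gpu_kernel(done: threading.Event, seconds: float) -> None:
    time.sleep(seconds)  # hypothetical stand-in for the GPU finishing a batch
    done.set()


if __name__ == "__main__":
    for waiter in (spin_wait, blocking_wait):
        done = threading.Event()
        worker = threading.Thread(target=fake_gpu_kernel, args=(done, 0.2))
        cpu_start = time.process_time()  # CPU time of the whole process, all threads
        worker.start()
        waiter(done)
        worker.join()
        print(f"{waiter.__name__}: {time.process_time() - cpu_start:.3f} CPU seconds")
```

On a typical machine the spin-wait run reports CPU time close to the 0.2 s wait, while the blocking run reports almost none; that busy core is the per-GPU cost of a polling driver.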


Re: PCI-e splitter?

Your comparison of FAH farms to mining farms will have issues. FAH's primary purpose is scientific research, and the proteins being analyzed today require A LOT of data. Some projects are being restricted because the RAM available to hold the data is too small, and that data needs to be passed to the GPU in separate chunks (maybe called "pages", but probably not the same size as RAM pages), and then pages of results are passed back. Even 2 fast GPUs push enough data through a 16x PCIe bus for it to approach continuous saturation.

Mining, on the other hand, can be done with a relatively small program and a relatively small amount of data. I suspect the processing begins with a one-time event that fills the PCIe bus temporarily, after which the bus remains mostly idle, so 1x would probably see very little performance degradation.

As I said earlier, I'm not aware of anybody doing this with FAH before, but we'd really appreciate a report about what you learn to either confirm or reject the suppositions about FAH that we're offering.


-

toTOW

- Site Moderator

- Posts: 6531

- Joined: Sun Dec 02, 2007 10:38 am

- Location: Bordeaux, France


Re: PCI-e splitter?

I remember reading a number of bad experiences in mining forums with (powered) PCIe 1x to 16x risers ...

In the past, I used a 16x PCIe riser to fold on an 8800 GTS and it was doing pretty well, but in those days FAH cores didn't use much PCIe bandwidth... On Core 21 and a Maxwell GPU, GPU-Z reports that I'm using ~45% of my PCIe 2.0 16x slot...
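The GPU-Z figure above can be turned into a rough bandwidth estimate. This assumes the ~45% reading maps linearly onto the link's one-direction bandwidth (GPU-Z's bus-load figure is a sampled estimate, so treat the result as an order of magnitude) and uses the usual post-encoding per-lane rates of 500 MB/s for PCIe 2.0 and about 985 MB/s for 3.0.

```python
# Approximate one-direction payload bandwidth per lane (MB/s) after line encoding.
PER_LANE_MB_S = {"2.0": 500.0, "3.0": 984.6}


def link_mb_s(gen: str, lanes: int) -> float:
    return PER_LANE_MB_S[gen] * lanes


def implied_traffic_mb_s(bus_load: float, gen: str, lanes: int) -> float:
    """Traffic implied by a bus-load reading, assuming it is a linear fraction of link bandwidth."""
    return bus_load * link_mb_s(gen, lanes)


traffic = implied_traffic_mb_s(0.45, "2.0", 16)  # ~45% of PCIe 2.0 x16 (Core 21, Maxwell)
for gen, lanes in [("2.0", 16), ("3.0", 4), ("3.0", 1), ("2.0", 1)]:
    ratio = traffic / link_mb_s(gen, lanes)
    print(f"PCIe {gen} x{lanes}: traffic would be {ratio:.2f}x the link's capacity")
```

Under those assumptions, roughly 3,600 MB/s of traffic would be several times what a single 2.0 or 3.0 lane can carry. That supports the concern about x1, but it is no substitute for an actual x1 test.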


-

ChristianVirtual

- Posts: 1576

- Joined: Tue May 28, 2013 12:14 pm

- Location: Tokyo

Re: PCI-e splitter?

I read somewhere that those MOLEX connectors are not specced to deliver 75W... I understand that most GPU power comes via the PCIe 6/8-pin connectors, but I would still do more research on the MOLEX to avoid thermal surprises.

Also make sure the PSU the MOLEX is connected to has enough juice to deliver, and don't use too many Y-cables/splitters.
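The power concern comes down to I = P / V. The sketch below converts slot-level wattage to 12 V current and totals loads against a PSU. It assumes the riser feeds the full 75 W slot budget from 12 V (the slot spec also has a small 3.3 V share). The 80% loading ceiling and the wattages in the example are illustrative assumptions. Compare the resulting current against the rating of the actual connector and cable, which isn't given here.

```python
def amps(watts: float, volts: float = 12.0) -> float:
    """Current needed to deliver `watts` on a rail at `volts` (I = P / V)."""
    return watts / volts


def psu_headroom(psu_watts: float, loads_watts: list[float], max_load: float = 0.8) -> float:
    """Watts left over if the PSU is kept at or below `max_load` of its rating.

    The 80% ceiling is an assumed rule of thumb, not a spec value.
    Negative means the loads exceed that ceiling.
    """
    return psu_watts * max_load - sum(loads_watts)


# One riser feeding full slot power (75 W) from a Molex lead:
print(f"one riser: {amps(75):.2f} A")
# Four such risers daisy-chained off one lead with Y-cables:
print(f"four risers on one lead: {amps(4 * 75):.2f} A")
# Hypothetical 1000 W PSU, three 250 W cards plus 150 W for the rest of the system:
print(f"headroom: {psu_headroom(1000, [250, 250, 250, 150]):.0f} W")
```

Daisy-chaining risers with Y-cables adds their currents on one lead, which is why the number of splitters matters.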


Re: PCI-e splitter?

ChristianVirtual wrote: Also make sure the PSU the MOLEX is connected to has enough juice to deliver, and don't use too many Y-cables/splitters.

... or add an external PSU.


Re: PCI-e splitter?

Huh... Maybe I should just invest in mining rigs, recover the costs, and use the profit to give other people folding hardware instead...

-

foldy

- Posts: 2040

- Joined: Sat Dec 01, 2012 3:43 pm

- Hardware configuration: Folding@Home Client 7.6.13 (1 GPU slots)

Windows 7 64bit

Intel Core i5 2500k@4Ghz

Nvidia gtx 1080ti driver 441

Re: PCI-e splitter?

Why not expand it step by step? First fill one mainboard's PCIe slots with GPUs and see whether performance drops much with each added GPU.

If it's still running fast enough, add the first PCIe splitter, fill it with GPUs, and so on.

This will give you the max number of GPUs that your PC can handle without much performance loss.

Then you can decide if you just keep this one PC or expand to second PC and so on.
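The step-by-step approach above can be written as a simple stopping rule. This is a hypothetical sketch: `per_gpu_ppd` would be the average points per day per GPU that you measure yourself after each GPU is added (ideally over several WUs, since PPD varies by project), and the 10% tolerance is an arbitrary example.

```python
def max_useful_gpus(per_gpu_ppd: list[float], max_drop: float = 0.10) -> int:
    """Number of GPUs to keep before per-GPU throughput drops more than
    `max_drop` below the one-GPU baseline.

    per_gpu_ppd[i] is the measured average PPD per GPU with i + 1 GPUs installed.
    """
    if not per_gpu_ppd:
        return 0
    baseline = per_gpu_ppd[0]
    keep = 0
    for count, ppd in enumerate(per_gpu_ppd, start=1):
        if ppd < baseline * (1 - max_drop):
            break  # this GPU (or splitter) cost too much; stop expanding here
        keep = count
    return keep


# Made-up measurements after adding GPUs one at a time:
print(max_useful_gpus([500_000, 495_000, 480_000, 430_000]))
```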

I don't know your PCIe splitter product in detail, but there are also PCIe switches.

The difference is that a splitter divides the bandwidth equally among all connectors.

A switch, instead, can temporarily give full bandwidth to one connector while the other connectors are not busy.

If all are fully busy, each gets the same bandwidth as with the splitter.

A switch is used, e.g., on this board to get four PCIe 3.0 x16 slots:

http://www.asrock.com/mb/Intel/X79%20Extreme11/

Or on this dual-CPU server board to get eight PCIe 3.0 x16 slots:

https://www.supermicro.com/products/sys ... 7GR-TR.cfm
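The splitter/switch distinction can be modelled in a few lines. This sketch assumes the "splitter" is a static lane split (bifurcation, e.g. x16 into four x4 links) and that the switch's downstream links are each as wide as its uplink. Real switches also add some latency, which is ignored here. Bandwidths are in MB/s.

```python
def split_share(uplink_mb_s: float, ports: int) -> float:
    """Static lane split: each port always gets a fixed slice, busy or not."""
    return uplink_mb_s / ports


def switch_share(uplink_mb_s: float, busy_ports: int, port_link_mb_s: float) -> float:
    """Switch: the uplink is shared only among ports that are moving data right now."""
    if busy_ports < 1:
        raise ValueError("at least one port must be busy")
    return min(port_link_mb_s, uplink_mb_s / busy_ports)


UPLINK = 16 * 984.6  # PCIe 3.0 x16, approx. MB/s per direction
for busy in (1, 2, 4):
    print(f"{busy} of 4 busy: split {split_share(UPLINK, 4):.0f} MB/s, "
          f"switch {switch_share(UPLINK, busy, UPLINK):.0f} MB/s per busy card")
```

When all four ports are busy the two give the same per-card bandwidth; the switch only wins when some cards are idle at any given moment.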


Re: PCI-e splitter?

Okay, I'm still confused, and getting a lot of conflicting information from my finds. What I know in theory:

The Z170 chipset supports 20 PCI-e lanes. Combined with the 16 lanes supported by the CPU, that's 36 PCI-e 3.0 lanes to work with. PCIe 3.0 cuts line-encoding overhead from 20% (8b/10b in 2.0) to about 1.5% (128b/130b), so each lane can deliver an effective 985 MB/s.

Sources for this information:

http://www.hardocp.com/article/2015/08/ ... 0SrPt_I4y4

https://en.wikipedia.org/wiki/PCI_Expre ... xpress_3.0
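The 985 MB/s figure follows from the transfer rate and the line encoding. A minimal Python sketch that counts encoding overhead only (packet headers and flow control reduce real throughput further). Note also that on Z170 the 20 chipset lanes reach the CPU over a DMI 3.0 link that is roughly equivalent to PCIe 3.0 x4, so cards hanging off the chipset share that link.

```python
# Per-lane signalling rate (GT/s) and line encoding (payload bits, bits on the wire).
GENERATIONS = {
    "1.0": (2.5, 8, 10),     # 8b/10b
    "2.0": (5.0, 8, 10),     # 8b/10b
    "3.0": (8.0, 128, 130),  # 128b/130b
}


def lane_mb_per_s(gen: str) -> float:
    """One-direction payload bandwidth of a single lane in MB/s (10**6 bytes), encoding only."""
    rate_gt_s, payload_bits, wire_bits = GENERATIONS[gen]
    bits_per_s = rate_gt_s * 1e9 * payload_bits / wire_bits
    return bits_per_s / 8 / 1e6


def encoding_overhead(gen: str) -> float:
    """Fraction of raw bits spent on line encoding."""
    _, payload_bits, wire_bits = GENERATIONS[gen]
    return 1 - payload_bits / wire_bits


for gen in GENERATIONS:
    print(f"PCIe {gen}: {lane_mb_per_s(gen):.1f} MB/s per lane, "
          f"{encoding_overhead(gen):.1%} encoding overhead, "
          f"x16 = {16 * lane_mb_per_s(gen):.0f} MB/s")
```

So a 3.0 x1 link moves just under 1 GB/s in each direction, about double a 2.0 x1 link.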

The first thing that confuses me is the information above. Assuming I have understood it correctly, a GPU running on a 3.0 x1 link can transfer almost 1 GB of data per second. Is that much data actually being used?

The second thing confusing me, and one of a couple of sources of conflicting information, is that some people claim x1 works with no noticeable performance drop. One in particular is running a triple-GPU setup on a GA-AM1M-S2H board, which uses PCI-e 2.0 (half the bandwidth of 3.0), with the GPUs running at x1. I'm also waiting on a reply to a message I sent to someone else asking about their specs, but this person also claims to be running x1 cards without any issues.

I don't want to sound like I don't believe what people are saying here, please don't get me wrong. I just want to make sure that I have accurate information from people who have tested their hardware in the applicable scenarios. What hardware have you guys tested performance with (primarily directed at Joe and Nathan, but also anyone else who has tried)?


-

Nathan_P


Re: PCI-e splitter?

I haven't tested the low end. I run my GPUs on a Z9PE-D8 WS with an E5-2665 CPU giving 40 lanes; because only 1 CPU is installed at the moment, my 970 is running at PCIe 2.0 x8, so I don't see quite the same performance as at full x16, but the difference isn't much. It's late here in the UK, so when I'm back on tomorrow I'll see if I can locate the page with folding performance at different slot speeds. My rig should be back up next week, so I can do some testing for you.