foldy wrote: I guess the phone guy meant that if your GPU has an auxiliary power connection to the power supply, you need to check the power supply's wattage at 12V and not the total wattage. A 300 watt power supply may only have 200 watts on the 12V line, so it could not feed a 250+ watt GPU like the GTX 1080 Ti.

Or if you have several GPUs in the case then you need to add up the 75 watt of your 1050 Ti plus the x watts of your other GPUs to match the power supply wattage at 12V - not total wattage.

But your single GTX 1050 Ti is fine with your 400 watt power supply, because I guess it will have 250 watts at 12V, which is enough for the GTX 1050 Ti and your CPU (and maybe another GTX 1050 Ti).
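For anyone who wants to run the numbers, here is a rough sketch of the check foldy describes: add up what hangs off the 12V side and compare it with the supply's 12V rating rather than its total rating. The function name `fits_on_12v` and the 65 W CPU figure are made up for illustration; the 200 W, 250 W and 75 W figures are the ones quoted above.

```python
def fits_on_12v(budget_12v_watts, loads_watts):
    """Return True if the summed loads stay within the 12V budget."""
    return sum(loads_watts) <= budget_12v_watts

# 300 W supply with only 200 W available at 12V, feeding a 250 W GPU.
print(fits_on_12v(200, [250]))  # False

# 400 W supply guessed at 250 W on 12V: two 75 W cards plus a CPU.
ASSUMED_CPU_WATTS = 65  # hypothetical figure, not from the thread
print(fits_on_12v(250, [75, 75, ASSUMED_CPU_WATTS]))  # True
```

Counting each card's full 75 W against 12V is slightly pessimistic, which is the safe direction for this kind of estimate.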

Thanks for this. We were discussing the PCIe v2.0 X16 card slot itself and I'm pretty sure I told him there would be no PSU connection to the card. Plus, both cards in consideration are rated at 75W Power Consumption from the PCIe slot (not 250W+), and I told him that, too. But just in case...

The Cooler Master 400W PSU Specs on the sticker are:

+3.3V: 20A

+5.0V: 16A (120W is a combined total for +3.3V and +5.0V)

[with 15W from +3.3V for the GPU] see note in red below

+12V1: 16A

+12V2: 16A (276W is a combined total for +12V1 & +12V2.)

[with 60W from +12V for the GPU] see note in red below

-12V: 0.8A, 9.6W

+5VSB: 2.5A, 12.5W

+3.3V & +5V & +12V total output shall not exceed 327.9W.

I'm guessing that +12V1 is for the motherboard, and +12V2 is for the wired connectors.
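A quick sketch of the arithmetic behind the sticker, assuming watts are simply volts times amps per rail and that the combined caps printed on the label are the real limits. The names `RAILS`, `rail_watts` and `headroom` are just illustrative; the 60 W and 15 W card draws are the slot figures discussed in this thread.

```python
# Sticker ratings quoted above: rail -> (volts, amps).
RAILS = {
    "+3.3V": (3.3, 20.0),
    "+5V": (5.0, 16.0),
    "+12V1": (12.0, 16.0),
    "+12V2": (12.0, 16.0),
}
# Combined caps printed on the same sticker, in watts.
MINOR_CAP_W = 120.0   # +3.3V and +5V together
TWELVE_CAP_W = 276.0  # +12V1 and +12V2 together
TOTAL_CAP_W = 327.9   # +3.3V, +5V and +12V together

def rail_watts(name):
    """Per-rail wattage from the sticker's volts and amps."""
    volts, amps = RAILS[name]
    return volts * amps

def headroom(cap_watts, draw_watts):
    """Watts left under a cap after a given draw."""
    return cap_watts - draw_watts

for name in RAILS:
    print(f"{name}: {rail_watts(name):.1f} W")

print(f"12V left after a 60 W card draw: {headroom(TWELVE_CAP_W, 60.0):.1f} W")
print(f"3.3V/5V left after a 15 W draw: {headroom(MINOR_CAP_W, 15.0):.1f} W")
print(f"Total left after a 75 W card: {headroom(TOTAL_CAP_W, 75.0):.1f} W")
```

Each 12V rail works out to 192 W on its own, so the two together (384 W) exceed the 276 W combined figure; the combined caps are the numbers to budget against, not the per-rail products.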

jrweiss wrote: Voltage stability is important for GPUs. If you have a cheap PSU or are operating a low-output PSU near its limit, it may not be able to maintain voltage during power spikes.

The 12V rail is most important for GPUs. A good PSU will be able to put virtually its entire rated output to the 12V rail if needed. If its 12V rating is significantly less than total output rating, get a new PSU.

Thanks. It's a Cooler Master 400W PSU, so I think I'm OK on Quality.

I found the answer, worded the way I needed to hear it, in a discussion in a support thread at TechPowerUp.

Someone asked...

Q: What is the max power (watts) output of a pcie v1.0 x16 slot?

A: PCI-E 1.0 is 60W. Devices draw power from 12V and 3.3V, and cannot exceed 75W between the two.

Q: How about for pcie 2.0 x16?

A: Same thing, as far as I know, added 8-pin for high-power spec (where the actual power increase comes from) and the speed increase. There's something about PCI-E cabling (for server-type stuff), the automatic speed and link-width dynamic changes for power saving, and other such stuff unrelated to this conversation.

Q: So does this mean that slot powered gpu's like (my) hd4670 can only draw a max of 60watts from the pcie slot?

A: No, it's 75W from the slot, some being 3.3V and some being 12V. That's the most any PCI-E card can use, graphics or not.

A: It's 75 Watts from the slot itself. A combination of 60W from 12V and 15W from 3.3V.
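A small sketch of that slot budget as a check, taking the 60 W / 15 W split from the answer above at face value (it has not been checked against the PCIe specification here). The constant names and `within_slot_budget` are hypothetical.

```python
SLOT_12V_CAP_W = 60.0    # per the quoted answer
SLOT_3V3_CAP_W = 15.0    # per the quoted answer
SLOT_TOTAL_CAP_W = 75.0  # slot total from the quoted answer

def within_slot_budget(draw_12v_watts, draw_3v3_watts):
    """True if a card's draw respects both per-rail caps and the total."""
    return (
        draw_12v_watts <= SLOT_12V_CAP_W
        and draw_3v3_watts <= SLOT_3V3_CAP_W
        and draw_12v_watts + draw_3v3_watts <= SLOT_TOTAL_CAP_W
    )

print(within_slot_budget(60.0, 15.0))  # True: exactly at the limits
print(within_slot_budget(70.0, 5.0))   # False: 12V draw is over its cap
```

The second case adds up to 75 W but still fails, because under this reading the per-rail limits apply as well as the total.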

I'm going with this. This said it in a way that I could understand. Thanks again to both of you!

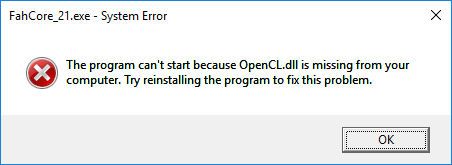

I avoided the MS installation and got the Driver directly via GeForce Experience. But as JimboPalmer suggested, I did a reinstall using Custom & Clean Install. That fixed me right up. However, it is very possible that MS did the dirty on me during a Windows Update between my two installations. I think there were two Windows Updates in between. But now, with your suggestion, I know what to look for and how to handle it. Thanks again!
